Recently, one of our VC clients asked if we could build a simple, LLM-based tool for extracting summaries from pitch decks in order to create a searchable pitch deck database.

This was an exciting project (that is still ongoing!) which will likely be helpful for other firms, so we’re detailing our approach to solving our client’s problem in this post.

Background

Before going any further, let’s explore the client’s ask for a searchable pitch deck database in the first place:

Pretend it’s February 2023 and you’re an associate at a seed-stage VC firm. ChatGPT has been simmering in public view for a couple months now and as a result, your industry is ablaze with LLM startups seeking funding. You see ideas coming in from many industries: AI for summarizing doctor appointments, AI for automating database queries, AI for conversing with your dog (sidebar: mine just wants to get outside or eat, preferably at the same time), and more. In your pre-investing career, you worked as a credit research analyst at a big bank, so your interest is piqued when a pitch for a product that scans financial filings to automatically generate credit ratings hits your inbox. You schedule a meeting with the founder but end up passing.

Fast forward to today and another pitch related to automatic credit rating generation hits your inbox. You’re interested in the new pitch, and you’d really like to jog your memory about the last, similar company’s approach. You try searching your inbox for remnants of the old conversation to no avail.

Wouldn’t it be nice if you had a searchable database of all of the previous pitch materials, to revisit old opportunities when you needed to?

Tools used

Note: you skip this section without losing any important context, but our hope is that the curious reader (maybe with some help from ChatGPT) will be able to give this a shot on their own. Programming simple applications has become much easier over time - we chose an awesome set of tools that don’t require years of experience to use for this project.

FastHTML

Our team loves the python programming language. It’s approachable, productive, and is a first-class citizen of many AI and data-oriented tools.

When we saw the recent announcement about FastHTML, a new web framework that lets you write web apps in pure python, we knew that it would be a great fit for the project. If you’re a user of Flask, Streamlit, Plotly Dash, FastAPI, or have done any web development in the past, you should definitely give FastHTML a spin! You’ll be up to speed in minutes (a testament to the team behind FastHTML). Bonus: support for HTMX is available out of the box.

OpenAI API

If you’ve used ChatGPT, you’re probably familiar with what language models can / can’t do well at this point. ChatGPT isn’t the only way to interact with OpenAI’s models, however. We needed to work with LLMs directly from our application code, so we decided to use the OpenAI apis for this project.

DuckDB

DuckDB thinks that big data is for quacks! This sentiment rang true for this project. DuckDB is easy to set up as a single-file database, it embraces the python ecosystem, and it has a built-in function for the search feature that we needed to implement for this project. For more details, you can check out this blog post from MotherDuck, which definitely inspired our choice.

Building the product

Deck scanning

Our first goal was to figure out how to use GPT to scan pitch decks in order to generate concise summaries. Without this feature, there wouldn’t be any data to archive and search over time.

Prompting

“Prompting” refers to the text-based instructions that you provide to a language model so that it more accurately predicts the output that you are looking for. When you type a question into ChatGPT, such as “Can you please give me some names for my AI pitch deck scanning SaaS tool”, there is probably some sort of prompt that gets prefixed to your question, such as (simplifying of course) “You are a helpful AI chatbot. You will receive questions from users, and you must respond to the questions as accurately and concisely as possible. If you don’t know the answer, hallucinate confidently!” (just kidding on the last part).

Remember, LLMs don’t think, they need prompts or guard rails to keep the model outputs in line with what you’re looking for. We tried several different prompts for this project, but an approach that worked surprisingly well was as follows:

Prompt one (note: we pass the model an image here - make sure that the model you’re using can accept image inputs):

“You are a helpful AI assistant at a VC firm. You are assisting a VC analyst at your firm that needs to review pitch decks. You will be given an image of a slide from a pitch deck, and you will describe it to the analyst. The analyst is very busy, so you need to make sure that your descriptions are as concise as possible without leaving out any important information.”

Prompt two (note: we pass the descriptions of all slides to this prompt):

“You are a helpful VC analyst. You will be passed a textual description of a pitch deck and your goal is to create a short summary of the business that is being pitched. Your summary will be converted to a vector embedding and then use for semantic search, so attempt to be as specific as possible when describing the business, market, and any other info from the deck.”

Pretty interesting, right? Here’s a quick breakdown of why we think that this approach is working well:

- Breaking the deck into slides

In OpenAI’s prompt engineering guide, they make it clear that breaking tasks down into manageable pieces is helpful for models. We made sure to have the model look at one slide at a time when taking its first pass at the deck.

- Instructions without room for misinterpretation

We told the model that it is preparing the descriptions for an analyst at a VC firm. We decided to do this to ensure that things like charts and images were described in an appropriate way for the VC use case.

- Separation of tasks

If you agree with us that 1) reading through a deck and 2) summarizing the deck are two different tasks, then you’ll understand why we broke this workflow into two main prompts. It’s helpful to think of each prompt in a chain of prompts as a station on an assembly line, meant for making a focused, incremental change to your input data.

Description embedding

After a pitch deck gets passed through the chain of prompts that we described above, we are left with a concise description of the business’s pitch.

Remember that our goal was to make these descriptions searchable (so that people will always be able to find old decks when needed), so our next step was to run the descriptions through an embedding model.

If you haven’t heard about semantic search before, here is a short description from Pinecone. The general idea is that semantic search is focused on matching meaning, instead of matching keywords.

Embedding models take text as input and return numerical representations of the text as output. After doing this, it’s simple to compare how closely related two pieces of text are, based on the similarity of their numerical representations.

We stuck with OpenAI as an embedding model provider as well, but there are many options to choose from.

After turning the text into an embedding, the only step left was to store all of the information that we wanted to track in a database, and finally to create a friendly user interface for running the application.

Database

As mentioned before, we chose to use DuckDB for this project. It’s an awesome, lightweight, single-file database, similar to SQLite but optimized for analytics instead of transactions. It doesn’t really seem like the developers of DuckDB are intending for it to be used in web applications, but because DuckDB supports arrays (the data type that we need for embeddings) and has a built in cosine similarity function (what we need for our search capabilities) it was a great choice for the project.

Because of the relatively narrow scope of the application, we just included a few fields. We store the deck (so that it can be easily downloaded), the description and the embedding.

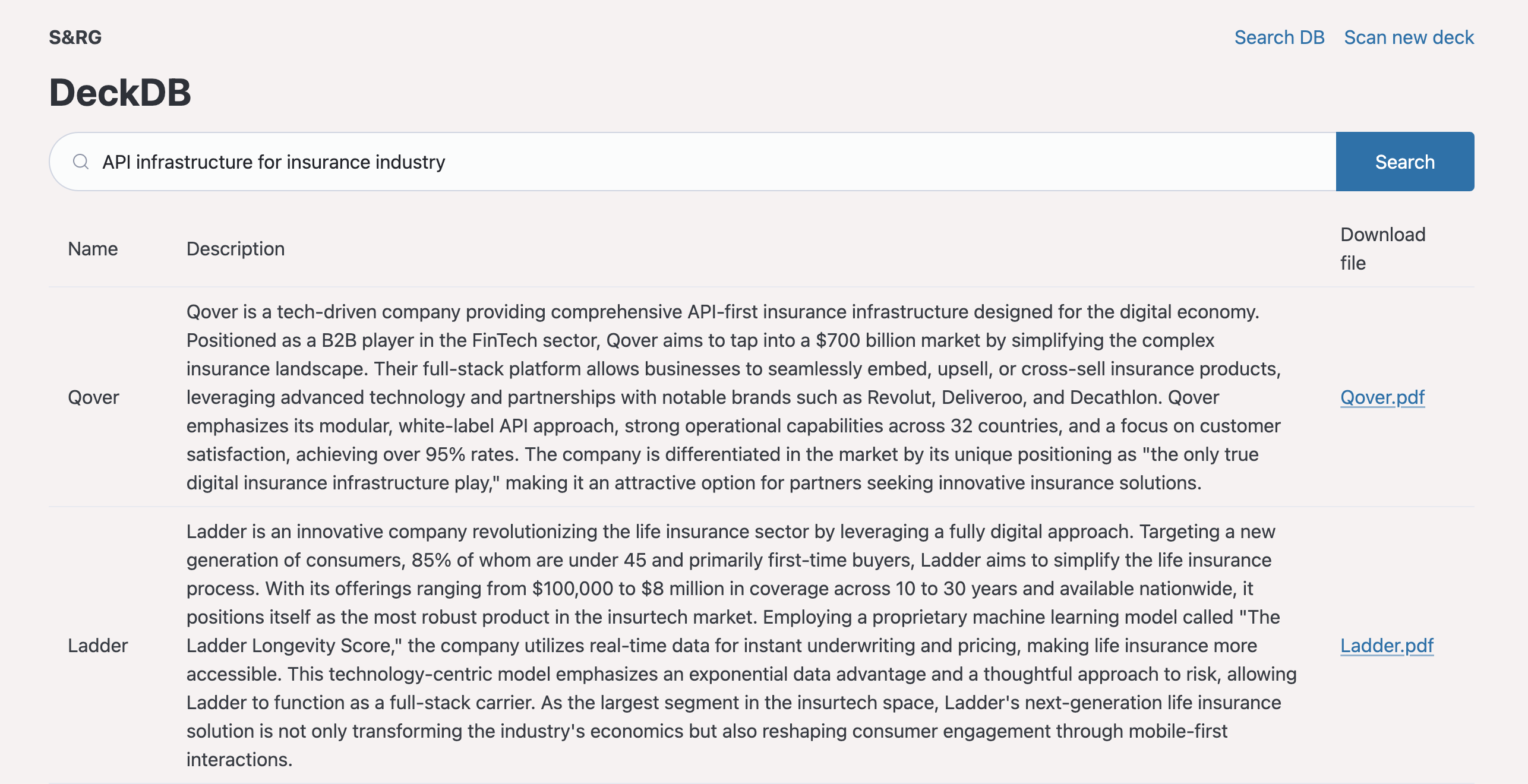

The user interface

The last step was to build a user interface with two tabs. In order to build the interface, we used FastHTML. The first tab allows users to upload a new deck for scanning. The second tab (the site index page) provides a search bar that our client can use to search the database. FastHTML made it easy to write the app in pure python. After this, we were done!